1 Million Downloads of shisa-gamma-7b-v1!

Exactly one year ago, Sakana AI first published Evolving New Foundation Models: Unleashing the Power of Automating Model Development, which used our shisa-gamma-7b-v1 as the Japanese model backbone for all of their evolutionary model merges. Their paper was accepted and published in Nature Machine Intelligence this January. Congrats!

Both the original and follow-on work drove a significant number of downloads for shisa-gamma-7b-v1, and being the top-ranked open model on Weights & Biases’ Japanese Nejumi LLM Leaderboard for most of last year didn’t hurt its popularity either.

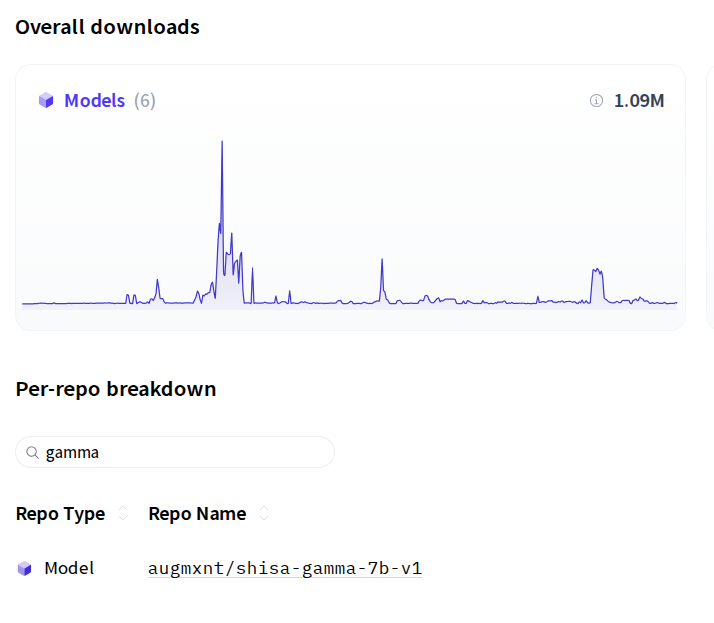

While it’s not quite 1 billion, when I recently took a look at our Hugging Face stats, I was surprised to see that it had actually exceeded 1 million downloads!

Granted, most of these downloads were likely automated, but even now the model continues to see a large number of downloads every month. It’s been amazing, but also a little alarming, to see such consistently high interest, especially since many stronger models have been published in the meantime.

Of course, I wouldn’t suggest that you stop using shisa-gamma-7b-v1 without offering one of our own new models as an alternative!

Take a peek at what we’ve been cooking up: shisa-ai/shisa-v2-llama3.1-8b-preview

It is our strongest model yet. On overall average, it beats not only all of our previous 7-8B class models but even the Llama 3 70B tune we released last year:

| Release Date | Model Name | Overall Avg | JA Avg | EN Avg | Shaberi Avg | shisa-jp-ifeval | llm-jp-eval | shisa-jp-rp-bench |
|---|---|---|---|---|---|---|---|---|
| 2025-03 | shisa-ai/shisa-v2-llama3.1-8b-preview | 62.46 | 70.35 | 54.58 | 7.18 | 0.19 | 0.56 | 4.67 |
| 2024-05 | shisa-ai/shisa-v1-llama3-70b | 60.70 | 68.45 | 52.95 | 6.82 | 0.24 | 0.56 | 4.51 |
| 2024-05 | shisa-ai/shisa-v1-llama3-8b | 50.09 | 57.37 | 42.80 | 6.30 | 0.09 | 0.23 | 4.26 |
| 2023-12 | augmxnt/shisa-gamma-7b-v1 | 37.37 | 53.94 | 20.80 | 5.49 | 0.13 | 0.52 | 3.22 |
| 2023-12 | augmxnt/shisa-7b-v1 | 29.04 | 36.92 | 21.17 | 3.51 | 0.15 | 0.46 | 1.82 |

Keep in mind, this is still very much a WIP (ablation-92, to be exact), and there’s plenty of juice left to squeeze. We still have more data, more tuning techniques, and more evals to work through before we release our official shisa-v2 models. Still, if you’re looking for more efficient tokenization, a larger context window, and an overall significantly more capable model with class-leading creative writing, multi-turn conversation, and instruction following, why not give the new shisa-v2-llama3.1-8b-preview a download?

We all stand on the shoulders of others, and the shisa-gamma-7b-v1 model is no exception. It literally wouldn’t have been possible to make what we did without the contributions of the research and open-source AI community. Special thanks to the team at Mistral for open-sourcing the Mistral 7B base model, to Stability AI Japan for the Japanese StableLM Base Gamma 7B continued pre-train, and to Jon Durbin, not just for co-training the original Shisa V1 models, but also for his work on airoboros, one of the OG synthetic data tools that helped us on our path.

Of course, we’re also grateful for the continued support of the Japanese and open-source AI communities. We’re still just getting started, so watch this space for more to come soon.