Sakana AI Evolves Models with shisa-gamma-7b-v1

Sakana AI just published some exciting new work on Evolutionary Model Merges of LLMs, which applies evolutionary techniques to discover optimal ways of combining different models. Their research demonstrates how evolutionary algorithms can automatically create new foundation models with specific capabilities by combining existing open-source models from different domains.

To our complete surprise, we discovered that they used our own shisa-gamma-7b-v1 instruction-tuned model as the Japanese backbone for all of their evolved models (which explains the recent uptick in model downloads we've been seeing), and we were incredibly pleased!

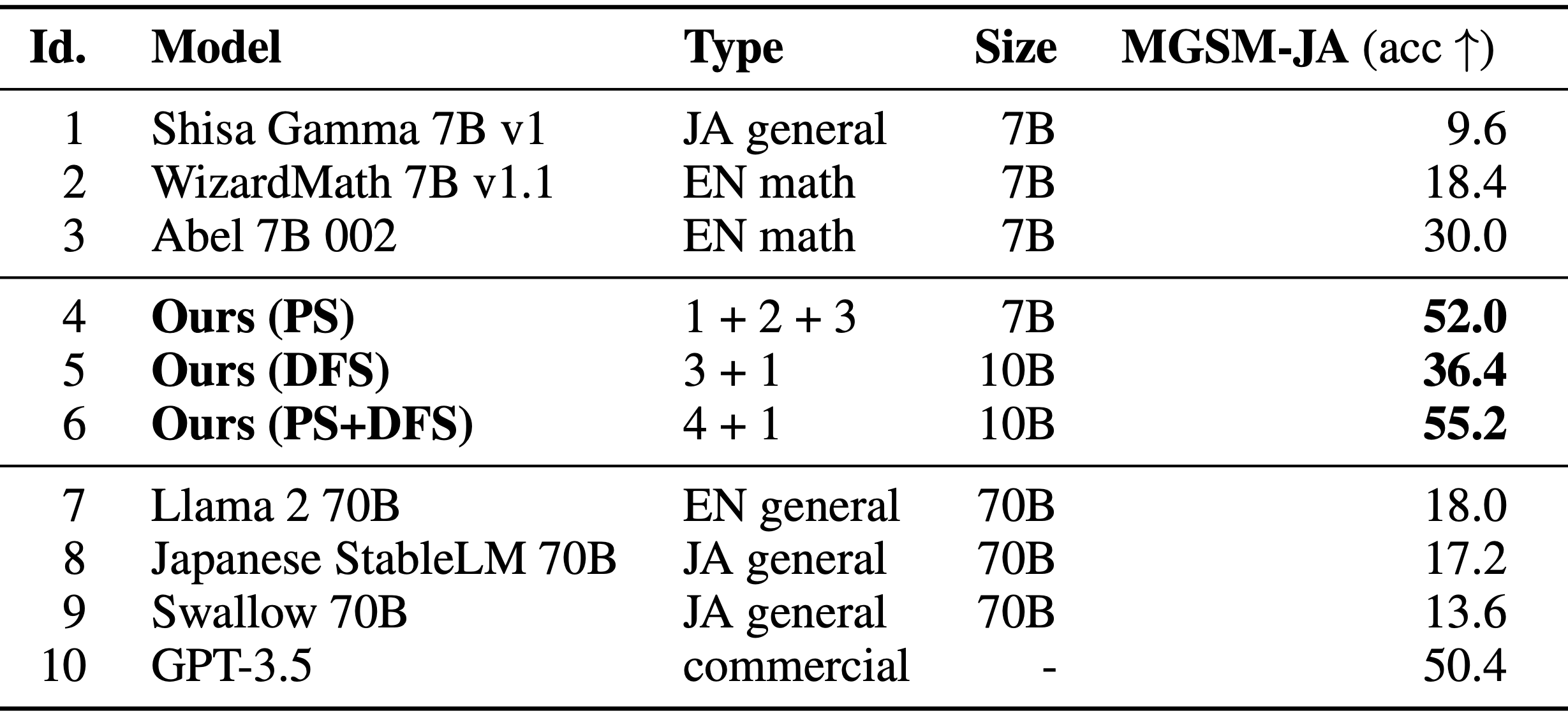

Their evolved Japanese Math LLM achieved state-of-the-art results on multiple Japanese LLM benchmarks, even outperforming some 70B-parameter models. Congrats again to the Sakana AI team, and we're glad to see our model being used in such an innovative way!